SMArtInt+

Our Modelica library for simple and user-friendly generation and integration of neural networks in Modelica models

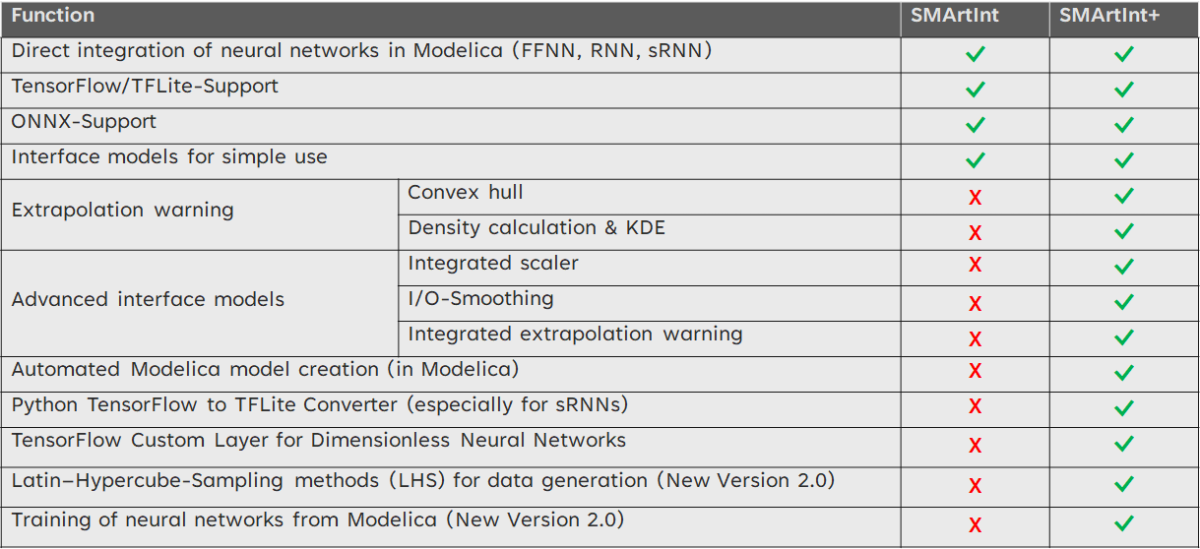

There is an increasing need for hybrid models that combine physical models with surrogate and data-driven models. XRG has developed an interface, the SMArtInt+ library, that enables easy generation and integration of neural networks of different types and from different sources. SMArtInt stands for Smart Integration of Artificial Intelligence in Modelica. The reduced open-source version, SMArtInt, is already available in the Dymola Library Portfolio, has been successfully tested in many real applications and is available free of charge on GitHub.

There are numerous AI frameworks for creating neural networks, e.g. TensorFlow, PyTorch, Flux.jl (Julia) and others. The SMArtInt+ library enables you to generate and integrate machine learning models from different sources in a standardized, user-friendly and efficient way. The neural networks are integrated as external source code that can be changed independently of the Modelica code. Currently, SMArtInt+ supports TensorFlow, TensorFlow Lite and ONNX models, which can be integrated into Dymola and OpenModelica or generated from Modelica blocks. SMArtInt+ offers the following model types and features:

- Quasi-static Feedforward Neural Networks (FFNN)

- Dynamic Recurrent Neural Networks (RNN), stateful or non-stateful

- Neural Ordinary Differential Equations (NODE)

- Generative models based on encoder/decoder architectures

- Surrogate models of complex applications using BNODE (Balanced Neural Ordinary Differential Equations)

- Latin Hypercube sampling for generating training data from Modelica models

- Training of TensorFlow neural networks in Dymola or OpenModelica with measured or generated data

- Automatic Modelica block generation from an external neural network

- Extrapolation warning (as an animation and as a warning message) if the inputs leave the convex hull of the training data or lie in a region with low training-data density

- Additional I/O features: scaling and smoothing

- Python converter for stateful RNNs (sRNN) from TensorFlow to TensorFlow Lite

- Nondimensionalization of neural networks to make them independent of absolute input values

- and of course our XRG product support
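Latin Hypercube sampling, listed above for generating training data, splits every input range into as many equal strata as there are samples and draws exactly one value per stratum, so a small number of simulation runs still covers each input evenly. How SMArtInt+ implements this is not described here; the following is a minimal, stdlib-only Python sketch of the general technique. The function name `latin_hypercube` and the example inputs (inlet temperature, mass flow rate) are hypothetical.

```python
import random


def latin_hypercube(n_samples, bounds, seed=None):
    """Return n_samples points; each input range is split into n_samples
    equal strata and every stratum is sampled exactly once per input.

    bounds: list of (low, high) tuples, one per model input (hypothetical).
    """
    rng = random.Random(seed)
    columns = []
    for _ in bounds:
        # One uniform draw inside each stratum [i/n, (i+1)/n) of the unit interval.
        unit = [(i + rng.random()) / n_samples for i in range(n_samples)]
        # Shuffle so that strata are paired randomly across inputs.
        rng.shuffle(unit)
        columns.append(unit)
    # Map the unit-interval values onto the physical input ranges.
    return [
        tuple(low + columns[d][j] * (high - low) for d, (low, high) in enumerate(bounds))
        for j in range(n_samples)
    ]


# Hypothetical example: inlet temperature in K and mass flow rate in kg/s.
bounds = [(300.0, 400.0), (0.1, 1.0)]
samples = latin_hypercube(10, bounds, seed=42)
for temperature, mass_flow in samples:
    print(f"T_in = {temperature:.1f} K, m_flow = {mass_flow:.3f} kg/s")
```

Each sampled tuple would correspond to the input or parameter set of one simulation run of the Modelica model; the recorded outputs of all runs then form the training data.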

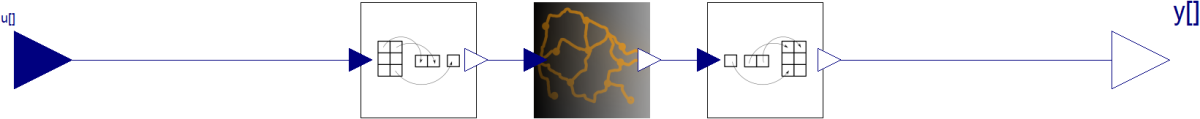

The new toolchain for generation and integration of neural networks using Modelica tools

The new Modelica- and Python-based toolchain is available in the simulation tools listed under Compatibility below. It enables seamless creation of neural networks without leaving the simulation tool.

- Step 1

If training data is not available, you can create it automatically from a physical (or mathematical) Modelica model. You only need to define the required outputs; the training data are then generated using a Latin Hypercube method.

- Step 2

Create any configuration of a neural network (FFNN, RNN, etc.) from parameterisable Modelica blocks and run the training from within the Modelica tool. The result is stored as an external TensorFlow neural network file.

- Step 3

Integrate the generated neural network using the automatic block generator and connect it to your physical or mathematical models via Modelica standard interfaces. Any potential extrapolation error can be checked visually with the animated extrapolation block.
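The extrapolation check mentioned in Step 3 flags inputs that leave the convex hull of the training data or fall into a sparsely sampled region. The criteria SMArtInt+ actually uses are not specified here, so the following is only a minimal Python sketch of the idea for two (already scaled) inputs: a convex hull built with Andrew's monotone chain, plus a simple neighbour count as a density measure. The function names and the `radius`/`min_neighbors` thresholds are hypothetical; for more than two inputs, a Delaunay triangulation (e.g. `scipy.spatial.Delaunay.find_simplex`) is a common alternative for the hull test.

```python
import math


def cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns the hull vertices counter-clockwise.
    Assumes at least three non-collinear points."""
    pts = sorted(set(points))
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # Drop the last point of each chain; it repeats the first point of the other.
    return lower[:-1] + upper[:-1]


def inside_hull(hull, q):
    """True if q lies inside or on the boundary of a counter-clockwise convex polygon."""
    n = len(hull)
    return all(cross(hull[i], hull[(i + 1) % n], q) >= 0 for i in range(n))


def extrapolation_status(train, q, radius, min_neighbors):
    """Classify input q against the training inputs (hypothetical thresholds).
    The hull is rebuilt on every call here for brevity; in practice it would
    be computed once after training."""
    if not inside_hull(convex_hull(train), q):
        return "outside convex hull"
    neighbors = sum(1 for p in train if math.dist(p, q) <= radius)
    if neighbors < min_neighbors:
        return "low data density"
    return "ok"


# Hypothetical scaled training inputs on a 5 x 5 grid.
train = [(x, y) for x in range(5) for y in range(5)]
print(extrapolation_status(train, (2.0, 2.0), radius=1.0, min_neighbors=3))  # ok
print(extrapolation_status(train, (5.0, 5.0), radius=1.0, min_neighbors=3))  # outside convex hull
```

Because the density test uses Euclidean distances, the inputs should be scaled to comparable ranges first; otherwise the input with the largest numeric range dominates the neighbour count.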

Use Case

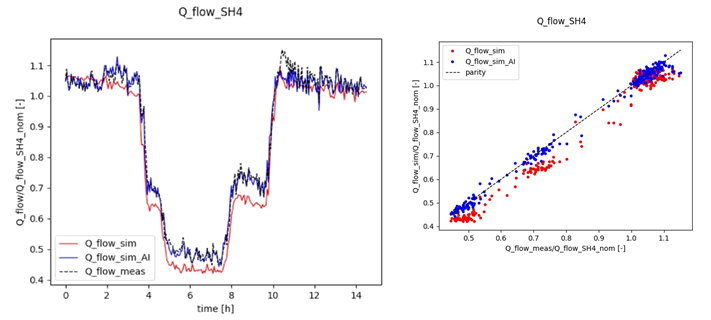

In the DIZPROVI research project, a surrogate model of a steam heat exchanger was created from measurement data. The graphic compares the results of the hybrid data-driven model (blue line and dots) with those of the purely physical model (red line and dots) for a scenario whose measurement data were not part of the training data set.

Compatibility

The library has been tested and is available for the current versions of:

- Dymola

- OpenModelica